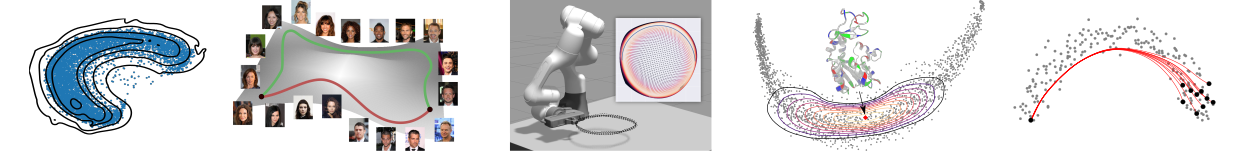

Left to right: we use differential geometry to provide a better prior for VAEs, to encode domain knowledge in generative models for improved interpretability, and to learn robot motion skills. In addition, we develop computationally efficient methods for fitting statistical models and computing shortest paths on Riemannian data manifolds.

A common hypothesis in machine learning is that the data lie near a low-dimensional manifold embedded in a high-dimensional ambient space. This implies that shortest paths between points should respect the underlying geometric structure. In practice, we can capture the geometry of a data manifold through a Riemannian metric in the latent space of a stochastic generative model, relying on meaningful uncertainty estimation for the generative process. This enables us to compute identifiable distances, since the length of the shortest path remains invariant under re-parametrizations of the latent space. Consequently, we are able to study the learned latent representations beyond the classic Euclidean perspective. Our work is based on differential geometry, and we develop computational methods accordingly.
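To make the invariance claim concrete, here is a minimal sketch. It assumes a deterministic toy decoder (the names `decoder`, `decoder_w`, and the paraboloid surface are illustrative and not from our models) and uses the standard pull-back metric G(z) = J(z)ᵀJ(z), where J is the decoder Jacobian. It ignores the uncertainty term that a stochastic generative model would contribute to the metric. The same curve is measured in two latent parametrizations related by z = sinh(w).

```python
import numpy as np

def decoder(z):
    """Hypothetical toy decoder f: R^2 -> R^3 mapping the latent plane onto a paraboloid."""
    z = np.asarray(z, dtype=float)
    return np.array([z[0], z[1], z[0] ** 2 + z[1] ** 2])

def decoder_w(w):
    """The same decoder expressed in re-parametrized latent coordinates, z = sinh(w)."""
    return decoder(np.sinh(np.asarray(w, dtype=float)))

def jacobian(f, z, eps=1e-6):
    """Central finite-difference Jacobian of f at z, with shape (D, d)."""
    z = np.asarray(z, dtype=float)
    cols = []
    for i in range(z.size):
        step = np.zeros_like(z)
        step[i] = eps
        cols.append((f(z + step) - f(z - step)) / (2.0 * eps))
    return np.stack(cols, axis=1)

def pullback_metric(f, z):
    """Pull-back of the Euclidean ambient metric: G(z) = J(z)^T J(z)."""
    J = jacobian(f, z)
    return J.T @ J

def riemannian_length(f, curve):
    """Length of a discretized latent curve, using the metric at each segment midpoint."""
    total = 0.0
    for a, b in zip(curve[:-1], curve[1:]):
        step = b - a
        G = pullback_metric(f, 0.5 * (a + b))
        total += np.sqrt(step @ G @ step)
    return total

def euclidean_length(curve):
    """Plain Euclidean length of the same discretized curve in latent coordinates."""
    return np.sum(np.linalg.norm(np.diff(curve, axis=0), axis=1))

# One curve, described in both coordinate systems.
t = np.linspace(0.0, 1.0, 201)
curve_z = np.stack([t, t], axis=1)
curve_w = np.arcsinh(curve_z)

length_z = riemannian_length(decoder, curve_z)
length_w = riemannian_length(decoder_w, curve_w)
euclid_z = euclidean_length(curve_z)
euclid_w = euclidean_length(curve_w)
```

In this sketch `length_z` and `length_w` agree up to discretization error, while the Euclidean latent lengths `euclid_z` and `euclid_w` differ, which is why distances measured under the pulled-back metric are identifiable and Euclidean latent distances are not.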

Geometric priors in latent space. Since the latent space can be characterized as non-Euclidean, we replace the standard Gaussian prior in Variational Auto-Encoders (VAEs) with a Riemannian Brownian motion prior, together with an efficient inference scheme. In particular, our prior is the heat kernel of a Brownian motion process, whose normalization constant is trivial; we can also easily generate samples and back-propagate gradients using the re-parametrization trick [ ].

Enriching the latent geometry. The ambient space of a generative model is typically assumed to be Euclidean. Instead, we propose to consider it as a Riemannian manifold, which enables us to encode high-level domain knowledge through the associated metric. In this way, we can control the shortest paths and improve the interpretability of the learned representation. For instance, on the data manifold of human faces, we may influence the shortest path to prefer the smiling class while moving optimally on the manifold, by using an appropriate Riemannian metric in the ambient space [ ].
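As a rough illustration of how an ambient metric reshapes latent geometry, the sketch below pulls back a non-Euclidean ambient metric M(x) through a toy decoder, giving G(z) = J(z)ᵀ M(f(z)) J(z). Everything specific here is an assumption made for illustration: the decoder, the `PREFERRED` ambient point standing in for a preferred class, and the conformal form M(x) = c(x)·I with parameters `alpha` and `scale`.

```python
import numpy as np

# Hypothetical ambient point standing in for a "preferred" region (e.g. a class).
PREFERRED = np.array([0.0, 1.0, 0.0])

def decoder(z):
    """Hypothetical toy decoder f: R^2 -> R^3."""
    return np.array([z[0], z[1], np.sin(z[0])])

def decoder_jacobian(z):
    """Analytic Jacobian of the toy decoder, shape (3, 2)."""
    return np.array([[1.0, 0.0], [0.0, 1.0], [np.cos(z[0]), 0.0]])

def euclidean_metric(x):
    """Standard assumption: the ambient space is Euclidean."""
    return np.eye(x.size)

def preference_metric(x, alpha=5.0, scale=0.5):
    """Conformal ambient metric c(x) * I, with c near 1 close to PREFERRED
    and approaching 1 + alpha far away from it."""
    closeness = np.exp(-0.5 * np.sum((x - PREFERRED) ** 2) / scale ** 2)
    return (1.0 + alpha * (1.0 - closeness)) * np.eye(x.size)

def latent_metric(z, ambient_metric):
    """Pull-back of the ambient metric: G(z) = J^T M(f(z)) J."""
    J = decoder_jacobian(z)
    return J.T @ ambient_metric(decoder(z)) @ J

def curve_length(curve, ambient_metric):
    """Length of a discretized latent curve, using the metric at segment midpoints."""
    total = 0.0
    for a, b in zip(curve[:-1], curve[1:]):
        step = b - a
        G = latent_metric(0.5 * (a + b), ambient_metric)
        total += np.sqrt(step @ G @ step)
    return total

# Two latent curves from (-1, 0) to (1, 0): a straight line, and a detour
# whose decoded image passes through PREFERRED.
t = np.linspace(0.0, 1.0, 201)
straight = np.stack([2.0 * t - 1.0, np.zeros_like(t)], axis=1)
detour = np.stack([-np.cos(np.pi * t), np.sin(np.pi * t)], axis=1)

ratio_euclid = curve_length(detour, euclidean_metric) / curve_length(straight, euclidean_metric)
ratio_pref = curve_length(detour, preference_metric) / curve_length(straight, preference_metric)
```

Under the preference metric, the detour through the preferred region becomes relatively cheaper than under the Euclidean one (`ratio_pref < ratio_euclid`). With a stronger or differently shaped metric the same mechanism can change which path is shortest, which is the sense in which the metric encodes domain knowledge.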

Probabilistic numerics on manifolds. In general, operations on Riemannian manifolds are computationally demanding, so we are interested in efficient approximate solutions. We use adaptive Bayesian quadrature to numerically compute integrals over normal laws on Riemannian manifolds. The basic idea is to combine prior knowledge with an active exploration scheme to reduce the number of costly evaluations required. In addition, we develop a fast and robust fixed-point iteration scheme for solving the system of ordinary differential equations (ODEs) that gives the shortest path between two points. The advantage of our approach over standard solvers is that we avoid the Jacobians of the ODE, which are ill-behaved for Riemannian manifolds learned from data [ ].
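For readers unfamiliar with Bayesian quadrature, the following is a deliberately simplified sketch: plain (non-adaptive) Bayesian quadrature in one Euclidean dimension, not the adaptive manifold version described above. It assumes a zero-mean Gaussian process prior with an RBF kernel of length scale `ell`, for which the integral of the kernel against a Gaussian measure has a closed form. The function names and the fixed node grid are illustrative choices.

```python
import numpy as np

def rbf_kernel(a, b, ell):
    """RBF kernel matrix k(a_i, b_j) = exp(-(a_i - b_j)^2 / (2 ell^2))."""
    return np.exp(-0.5 * (a[:, None] - b[None, :]) ** 2 / ell ** 2)

def kernel_mean(x, ell, mu, sigma):
    """Closed form of the integral of k(x, x') against N(x'; mu, sigma^2)."""
    s2 = ell ** 2 + sigma ** 2
    return np.sqrt(ell ** 2 / s2) * np.exp(-0.5 * (x - mu) ** 2 / s2)

def bayesian_quadrature(f, nodes, ell=1.0, mu=0.0, sigma=1.0, jitter=1e-10):
    """Posterior mean of the integral of f against N(mu, sigma^2) under a GP prior on f."""
    K = rbf_kernel(nodes, nodes, ell) + jitter * np.eye(nodes.size)
    z = kernel_mean(nodes, ell, mu, sigma)
    weights = np.linalg.solve(K, z)  # quadrature weights K^{-1} z
    return weights @ f(nodes)

# Estimate E[x^2] under a standard normal from only 15 function evaluations.
nodes = np.linspace(-4.0, 4.0, 15)
estimate = bayesian_quadrature(lambda x: x ** 2, nodes)
```

The estimate is a weighted sum of function values, so the cost is dominated by evaluating `f` at the nodes. An adaptive scheme would choose those nodes sequentially instead of fixing a grid, which is where the savings in costly evaluations come from.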

Robot motion skills. In robotic applications, a model that learns motion skills must generalize under dynamic changes of the environment in order to function in unstructured environments. For example, if an obstacle is introduced during the action, the robot should avoid it while still performing its task. We assume that human demonstrations span a data manifold on which shortest paths constitute natural motion skills. A robot is then able to plan movements through the associated shortest paths in the latent space of a VAE. Additionally, we can simply replace the Euclidean metric of the ambient space with a suitable Riemannian metric to account for dynamic obstacle avoidance tasks (R:SS '21 best student paper award) [ ].
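The obstacle-avoidance idea can be sketched in a planar toy setting. This is not the planner used on the robot: there is no VAE or decoder here, the metric is a hypothetical conformal bump c(p)·I around the obstacle, and the shortest path is found with Dijkstra's algorithm on a grid rather than by solving the geodesic ODE. The names `obstacle_cost` and `shortest_path` and all parameter values are assumptions for illustration.

```python
import heapq
import numpy as np

def obstacle_cost(p, center, radius=0.08, alpha=100.0):
    """Conformal factor c(p) >= 1 of a hypothetical metric c(p) * I that grows near the obstacle."""
    d2 = np.sum((p - center) ** 2)
    return 1.0 + alpha * np.exp(-0.5 * d2 / radius ** 2)

def shortest_path(start, goal, center, n=41):
    """Dijkstra on an n x n, 8-connected grid over [0, 1]^2.
    Each edge costs its Euclidean length times sqrt(c) at the edge midpoint."""
    xs = np.linspace(0.0, 1.0, n)

    def point(node):
        return np.array([xs[node[0]], xs[node[1]]])

    dist = {start: 0.0}
    parent = {}
    heap = [(0.0, start)]
    while heap:
        d, u = heapq.heappop(heap)
        if u == goal:
            break
        if d > dist[u]:
            continue  # stale heap entry
        for di in (-1, 0, 1):
            for dj in (-1, 0, 1):
                v = (u[0] + di, u[1] + dj)
                if (di == 0 and dj == 0) or not (0 <= v[0] < n and 0 <= v[1] < n):
                    continue
                pu, pv = point(u), point(v)
                c = obstacle_cost(0.5 * (pu + pv), center)
                nd = d + float(np.linalg.norm(pv - pu) * np.sqrt(c))
                if nd < dist.get(v, float("inf")):
                    dist[v] = nd
                    parent[v] = u
                    heapq.heappush(heap, (nd, v))

    # Walk back from the goal to recover the path as an array of points.
    nodes = [goal]
    while nodes[-1] != start:
        nodes.append(parent[nodes[-1]])
    nodes.reverse()
    return np.array([point(node) for node in nodes]), dist[goal]

# The obstacle sits exactly on the straight line between start and goal.
center = np.array([0.5, 0.5])
path, cost = shortest_path(start=(0, 20), goal=(40, 20), center=center)
clearance = np.min(np.linalg.norm(path - center, axis=1))
```

Because only the metric changes, moving the obstacle amounts to re-evaluating `obstacle_cost` with a new `center` and re-planning. The resulting path bends around the obstacle while staying as short as the metric allows.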