Over the past decade, the paradigm of deep learning has been successful not only in machine learning, but also in the broader scientific community. Substantial effort has gone into the development of deep neural networks, and we have contributed several notable advancements.

Generative modeling. In [ ], we improve on variational autoencoders (VAEs) by designing more refined regularization mechanisms, making significant progress on some of the problems that constrain VAEs, e.g., poor sample quality and unstable training. Besides VAEs, we have also contributed to the field of generative adversarial networks (GANs). In [ ], we propose and study a boosting-style meta-algorithm that builds upon various modern generative models (including GANs) to improve their quality. Further, in [ ], we devise a way to constrain and control the kind of samples produced by a GAN generator. Beyond generative modeling, we have contributed to a better understanding of how neural networks work, for instance by exploring similarities and differences between deep neural networks and the human visual system [ ].

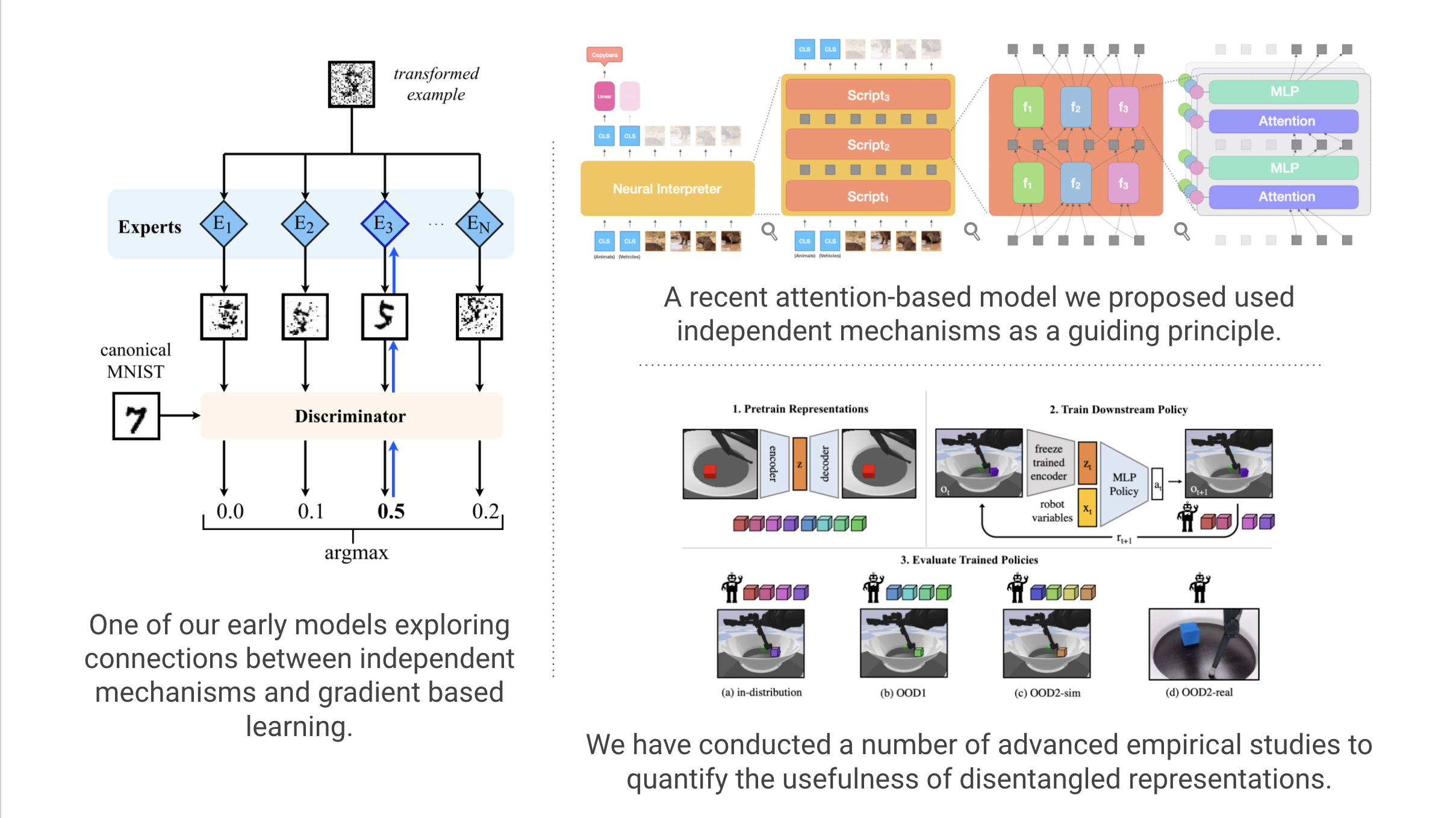

Causal learning. Our research has also pioneered synergies between causality and deep learning. Foundational to these advancements is the principle of independent causal mechanisms (ICM): the causal mechanisms that make up a system may interact in interesting and non-trivial ways, but their inner workings are otherwise independent of each other. In [ ], we marry the fundamental notion of ICM with the empirically successful framework of gradient-based learning of neural networks.

In one line of work [ ], we show how attention mechanisms may serve as a vehicle for incorporating the principle of independent mechanisms into neural networks in a scalable manner. This has led to impactful architectures that are robust out-of-distribution and can adapt more sample-efficiently to new data. In another line of work [ ], we probe disentangled representations using large-scale empirical studies that shed light on the components required to make them work in practice.

Finally, we have hosted various challenges and curated datasets [ ] valuable to the deep learning community, and we have pioneered deep learning methods for hard real-world problems such as gravitational wave parameter inference [ ].