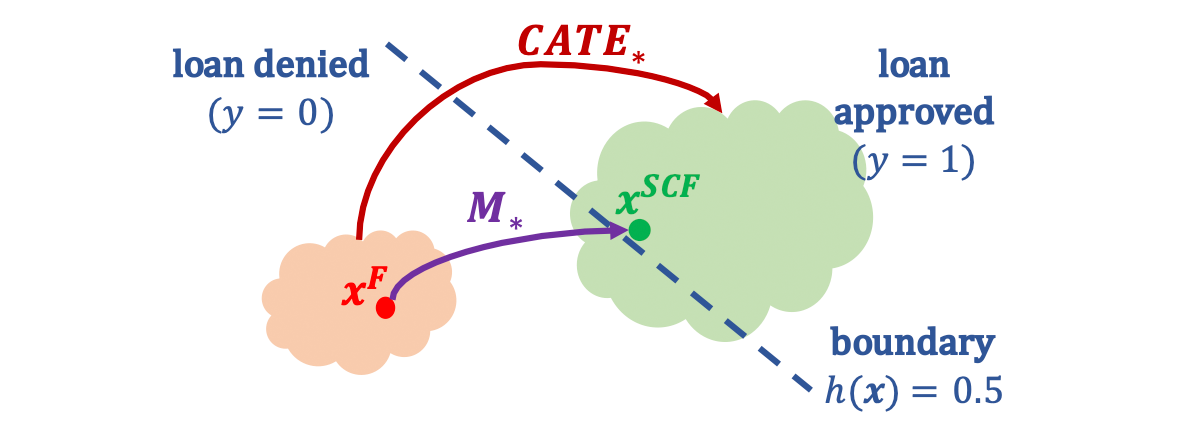

Recourse aims to offer individuals subject to automated decision-making systems a set of actionable recommendations for overcoming an adverse decision. Recommendations take the form of real-world actions governed by causal relations, so acting on one variable may have downstream effects on others. This figure illustrates point- and subpopulation-based algorithmic recourse approaches.

This research lies at the intersection of causal inference, explainable AI, and trustworthy ML. Given the increasing use of often opaque ("black-box") machine learning models for consequential decision-making, this line of work is of growing societal and legal importance. In particular, we consider the task of enabling and facilitating algorithmic recourse, which aims to provide individuals with explanations and recommendations on how best (i.e., efficiently and ideally at low cost) to recover from unfavorable decisions made by an automated system. We address the following questions:

How can we generate recourse explanations for affected individuals in diverse settings? Several works have proposed optimization-based methods to generate nearest counterfactual explanations (CFEs). However, these methods are often restricted to a particular class of models (e.g., decision trees or linear models), support only differentiable distance functions, or handle mixed data types poorly. Building on theory and tools from formal verification, we proposed MACE, a novel algorithm for generating CFEs [ ] that is: i) model-agnostic; ii) data-type-agnostic; iii) distance-agnostic; iv) able to generate plausible and diverse CFEs for any individual (100% coverage); and v) able to find CFEs at provably optimal distances. We also proposed a second solution with similar properties, this time based on mixed-integer linear programming [ ].
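To make the target of a "nearest counterfactual explanation" concrete, the sketch below searches for the closest input whose prediction differs from the original one. It is not MACE: instead of formal-verification tools, it swaps in a plain exhaustive search over a finite, hand-picked grid of candidate values. It shares only the interface-level properties of querying the model as a black box and accepting any distance function. The loan model, its features, the grid, and the per-feature weights are all hypothetical.

```python
from itertools import product
from typing import Callable, Optional, Sequence, Tuple

Point = Tuple[float, ...]


def nearest_cfe(
    predict: Callable[[Point], int],
    x: Point,
    candidate_values: Sequence[Sequence[float]],
    distance: Callable[[Point, Point], float],
) -> Optional[Tuple[Point, float]]:
    """Return the closest grid point whose prediction differs from predict(x).

    Exhaustive search (NOT the MACE algorithm): it only queries `predict`, so
    it works for any model and any `distance`, but its cost grows
    exponentially with the number of features.
    """
    original = predict(x)
    best: Optional[Tuple[Point, float]] = None
    for candidate in product(*candidate_values):
        if predict(candidate) == original:
            continue  # prediction not flipped, so not a counterfactual
        d = distance(x, candidate)
        if best is None or d < best[1]:
            best = (candidate, d)
    return best  # None if no grid point flips the decision


def weighted_l1(weights: Sequence[float]) -> Callable[[Point, Point], float]:
    """Build a weighted L1 distance; weights act as per-feature change costs."""
    def dist(a: Point, b: Point) -> float:
        return sum(w * abs(ai - bi) for w, ai, bi in zip(weights, a, b))
    return dist


# Hypothetical loan model over mixed data types:
# income (thousands), debt (thousands), has_degree (binary).
def loan_model(z: Point) -> int:
    income, debt, has_degree = z
    return int(income - 0.5 * debt + 10 * has_degree >= 50)


applicant = (40, 20, 0)  # score 30, so the applicant is rejected
grid = [range(30, 81, 5), range(0, 41, 5), (0, 1)]
result = nearest_cfe(loan_model, applicant, grid, weighted_l1((0.1, 0.1, 0.5)))
print(result)  # ((50, 20, 1), 1.5)
```

Under these assumed weights, obtaining the degree is cheap enough that the nearest CFE combines it with a smaller income increase. Changing the weights (i.e., the distance function) changes the answer without touching the search, which is the sense in which a method can be distance-agnostic.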

What actionable insight can be extracted from a CFE? Next, we examined a central but often overlooked purpose of CFEs: enabling people to act, rather than merely to understand. We showed that actionable recommendations cannot, in general, be inferred from CFEs. We formulated a new optimization problem for directly generating minimal consequential interventions (MINT), offering exact recourse when the underlying causal model is known [ ], and probabilistic recourse when only the causal graph is known [ ] or under potential confounding [ ].
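A small worked example helps show why a CFE is not, by itself, a recommended action. The sketch below assumes a hypothetical two-variable linear structural causal model, X1 := U1 and X2 := 0.5 · X1 + U2, a hypothetical classifier that approves when x1 + x2 ≥ 4, and an L1 cost on the size of each action. Counterfactual outcomes of actions are computed by abduction, action, and prediction. The cheapest intervention is then found by brute-force search over a grid, which stands in for, and does not reproduce, the optimization used for MINT.

```python
from itertools import product
from typing import Dict, Optional, Sequence, Tuple

Point = Tuple[float, float]
B = 0.5  # hypothetical causal strength in X2 := B * X1 + U2


def abduct(x: Point) -> Point:
    """Abduction: recover the exogenous noise (U1, U2) from observed features."""
    x1, x2 = x
    return x1, x2 - B * x1


def counterfactual(x: Point, do: Dict[int, float]) -> Point:
    """Action + prediction: apply hard interventions, then propagate downstream."""
    u1, u2 = abduct(x)
    x1 = do[0] if 0 in do else u1
    x2 = do[1] if 1 in do else B * x1 + u2  # X2 responds to the (new) X1
    return (x1, x2)


def classifier(x: Point) -> int:
    """Hypothetical decision rule: approve when x1 + x2 >= 4."""
    return int(x[0] + x[1] >= 4.0)


def minimal_intervention(
    x: Point, grid: Sequence[float]
) -> Optional[Tuple[Dict[int, float], float]]:
    """Brute-force search for the cheapest set of interventions that flips the decision."""
    best: Optional[Tuple[Dict[int, float], float]] = None
    options = [[None, *grid] for _ in x]  # None means "do not intervene"
    for choice in product(*options):
        do = {i: v for i, v in enumerate(choice) if v is not None}
        if not do or classifier(counterfactual(x, do)) != 1:
            continue
        cost = sum(abs(v - x[i]) for i, v in do.items())  # L1 cost of the actions
        if best is None or cost < best[1]:
            best = (do, cost)
    return best


x = (1.0, 1.5)  # factual features: score 2.5, rejected
grid = [0.5 * k for k in range(13)]  # candidate values 0.0, 0.5, ..., 6.0

# A non-causal CFE could suggest x' = (2.5, 1.5) at L1 distance 1.5, but
# acting on X1 also moves X2, so the realized outcome is not x':
print(counterfactual(x, {0: 2.5}))  # (2.5, 2.25)
# The cheapest intervention exploits that downstream effect:
print(minimal_intervention(x, grid))  # ({0: 2.0}, 1.0)
```

In this toy model, the CFE both misdescribes the state an individual would reach by following it and overstates the effort required: setting X1 to 2.0 suffices at cost 1.0, because the induced change in X2 does part of the work.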

How does offering recourse affect other stakeholders? We argue that granting individuals a right to recourse should be considered neither independently of its effect on other stakeholders (e.g., model deployers and regulators) nor in isolation from other desirable properties (e.g., fairness, robustness, privacy, and security). In follow-up work, we studied the fairness and robustness of causal algorithmic recourse, positioning these properties as complementary to, but not implied by, fair and robust prediction [ ]. Our experiments showed how recourse solutions that are both fair and robust may be obtained. Finally, we summarized the state of the field of recourse in a review paper [ ].
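As a point of reference for what a group-level fairness check on recourse can look like, the sketch below compares the average cost of recourse across two groups of rejected individuals. It is a deliberately simplified, non-causal proxy, not the causal notions studied in the follow-up work. It assumes a hypothetical linear classifier and measures cost as the Euclidean distance to its decision boundary, which treats features as independently manipulable, exactly the assumption the causal view relaxes. The weights, data, and group labels are illustrative only.

```python
import math
from typing import Dict, List, Sequence, Tuple


def score(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    """Linear decision score; negative means the individual is rejected."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def recourse_cost(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    """Euclidean distance from x to the decision boundary w.x + b = 0."""
    return abs(score(w, b, x)) / math.sqrt(sum(wi * wi for wi in w))


def mean_recourse_cost_by_group(
    w: Sequence[float], b: float, data: Sequence[Tuple[Sequence[float], str]]
) -> Dict[str, float]:
    """Average recourse cost per group, over rejected individuals only."""
    costs: Dict[str, List[float]] = {}
    for x, group in data:
        if score(w, b, x) < 0:  # rejected, so this individual needs recourse
            costs.setdefault(group, []).append(recourse_cost(w, b, x))
    return {g: sum(c) / len(c) for g, c in costs.items()}


w, b = (3.0, 4.0), -10.0  # hypothetical classifier with ||w|| = 5
data = [((1.0, 1.0), "A"), ((0.0, 1.0), "A"), ((0.0, 0.0), "B"), ((2.0, 2.0), "B")]
avg = mean_recourse_cost_by_group(w, b, data)
print({g: round(c, 2) for g, c in avg.items()})  # {'A': 0.9, 'B': 2.0}
```

Here rejected members of group B would, on average, need to move more than twice as far as those of group A. A causal treatment would instead measure cost in terms of interventions propagated through a causal model, as in the MINT setting above.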