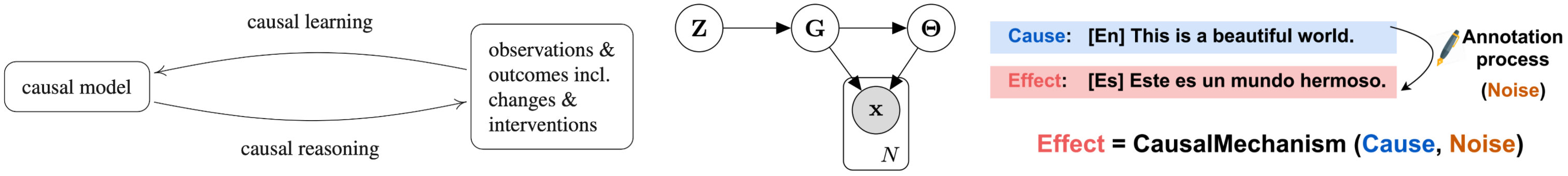

(left) In the terminology of our recent book [ ], causal inference comprises both causal reasoning and causal learning/discovery: the former employs causal models for inference about expected observations (often, about their statistical properties), whereas the latter is concerned with inferring causal models from empirical data. Some recent work includes: (center) a fully-differentiable Bayesian causal discovery method [ ] (NeurIPS'21 spotlight), and (right) an application of causal reasoning to NLP [ ] (EMNLP'21 oral).

Both causal reasoning and learning crucially rely on assumptions on the statistical properties entailed by causal structures. During the past decade, various assumptions have been proposed and assayed that go beyond traditional ones like the causal Markov condition and faithfulness. This has implications for both scenarios: it improves identifiability of causal structure and also entails additional statistical predictions if the causal structure is known.

Causal reasoning. Under suitable model assumptions (here: additive independent noise), causal knowledge admits novel noise-removal techniques such as half-sibling regression, published in PNAS [ ] and applied to astronomical data from NASA's Kepler space telescope [ ].
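The idea behind half-sibling regression can be illustrated with a toy example: if a measurement y is the sum of a signal q and systematic noise, and another measurement x is affected by the same noise but not by q, then y - E[y | x] removes the part of y that x can explain. The sketch below is a minimal illustration under strong assumptions (a single predictor, a linear fit for E[y | x], synthetic Gaussian data); it is not the published implementation, and all function names are hypothetical.

```python
import random


def fit_line(x, y):
    """Ordinary least squares for y ~ slope * x + intercept."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def half_sibling_denoise(y, x):
    """Estimate the signal as y - E[y | x], with a linear fit for E[y | x]."""
    slope, intercept = fit_line(x, y)
    return [b - (slope * a + intercept) for a, b in zip(x, y)]


def simulate(n=2000, seed=0):
    """Toy data (assumed): y = q + shared noise; x sees the noise but not q."""
    rng = random.Random(seed)
    q = [rng.gauss(0.0, 1.0) for _ in range(n)]       # signal of interest
    shared = [rng.gauss(0.0, 2.0) for _ in range(n)]  # systematic noise
    y = [qi + si for qi, si in zip(q, shared)]
    x = [si + rng.gauss(0.0, 0.1) for si in shared]   # "half-sibling" of y
    return q, y, x


def mse(a, b):
    """Mean squared error between two equally long sequences."""
    return sum((u - v) ** 2 for u, v in zip(a, b)) / len(a)


if __name__ == "__main__":
    q, y, x = simulate()
    q_hat = half_sibling_denoise(y, x)
    print("error before:", mse(y, q))
    print("error after: ", mse(q_hat, q))
```

Because x is independent of q in this toy setup, regressing y on x cannot remove any of the signal, only the shared noise. The signal is recovered only up to a constant offset, since the residuals have zero mean.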

Apart from entailing statistical properties for a fixed distribution, causal models also suggest how distributions change across data sets. One may assume, for instance, that structural equations remain constant and only the noise distributions change [ ], that some of the causal conditionals in a causal Bayesian network change, while others remain constant [ ], or that they change independently [ ], which results in new approaches to domain adaptation [ ].
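The first of these assumptions can be made concrete with a small simulation. The sketch below assumes a hypothetical linear structural equation Y := 2X + N_Y that is shared by two data sets, while the distributions of X and N_Y differ between them. Regressing in the causal direction recovers roughly the same coefficient in both data sets, whereas the anticausal regression coefficient changes. The coefficient, noise scales, and function names are illustrative assumptions, not taken from the cited work.

```python
import random


def ols_slope(x, y):
    """Slope of the least-squares regression of y on x."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    return sxy / sxx


def sample_environment(n, sd_x, sd_noise, seed):
    """Draw (x, y) from the fixed mechanism y = 2x + noise (assumed)."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, sd_x) for _ in range(n)]
    y = [2.0 * xi + rng.gauss(0.0, sd_noise) for xi in x]
    return x, y


def slopes(x, y):
    """Return (causal slope of y on x, anticausal slope of x on y)."""
    return ols_slope(x, y), ols_slope(y, x)


if __name__ == "__main__":
    env_a = sample_environment(5000, sd_x=1.0, sd_noise=1.0, seed=1)
    env_b = sample_environment(5000, sd_x=3.0, sd_noise=0.5, seed=2)
    print("environment A (causal, anticausal):", slopes(*env_a))
    print("environment B (causal, anticausal):", slopes(*env_b))
```

In this setting a predictor of Y from X fitted on one data set transfers to the other, which is the kind of invariance that causal approaches to domain adaptation try to exploit.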

Based on the idea of no shared information between causal mechanisms [ ], we developed a new type of conditional semi-supervised learning [ ]. More recently, we showed that causal structure can explain a number of published NLP findings [ ], and explored its use in reinforcement learning [ ].

Causal learning/discovery. We have further explored the basic problem of telling cause from effect in bivariate distributions [ ], which we had earlier shown to be informative for more general causal inference problems as well. A long JMLR paper [ ] extensively studies the performance of a variety of approaches, suggesting that distinguishing cause and effect is indeed possible above chance level.
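One family of approaches to the bivariate problem is based on additive noise models: fit a regression in each direction and prefer the direction in which the residuals look independent of the input. The sketch below is only an illustration of that idea under assumptions of our own choosing (synthetic data with Y = X^3 + noise, a cubic polynomial regression, and a biased HSIC estimate with a unit-bandwidth Gaussian kernel as the dependence score). It is not the procedure evaluated in the cited paper, and all names are hypothetical.

```python
import math
import random


def standardize(v):
    """Rescale a sequence to zero mean and unit variance."""
    m = sum(v) / len(v)
    s = math.sqrt(sum((a - m) ** 2 for a in v) / len(v))
    return [(a - m) / s for a in v]


def solve_linear(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def poly_residuals(x, y, degree=3):
    """Residuals of a least-squares polynomial fit of y on x."""
    powers = [[xi ** k for k in range(degree + 1)] for xi in x]
    size = degree + 1
    gram = [[sum(p[i] * p[j] for p in powers) for j in range(size)]
            for i in range(size)]
    rhs = [sum(p[i] * yi for p, yi in zip(powers, y)) for i in range(size)]
    coef = solve_linear(gram, rhs)
    return [yi - sum(c * pk for c, pk in zip(coef, p))
            for p, yi in zip(powers, y)]


def centered_gram(v):
    """Doubly centered Gaussian kernel matrix (bandwidth 1)."""
    n = len(v)
    k = [[math.exp(-0.5 * (a - b) ** 2) for b in v] for a in v]
    row = [sum(r) / n for r in k]
    total = sum(row) / n
    return [[k[i][j] - row[i] - row[j] + total for j in range(n)]
            for i in range(n)]


def hsic(u, v):
    """Biased HSIC estimate; larger values indicate stronger dependence."""
    ku = centered_gram(standardize(u))
    kv = centered_gram(standardize(v))
    n = len(u)
    return sum(a * b for ru, rv in zip(ku, kv) for a, b in zip(ru, rv)) / n ** 2


def anm_score(cause, effect, degree=3):
    """Dependence between the candidate cause and the regression residuals."""
    c, e = standardize(cause), standardize(effect)
    return hsic(c, poly_residuals(c, e, degree))


def simulate_pair(n=300, seed=0):
    """Toy data (assumed): x uniform on [-2, 2], y = x**3 + Gaussian noise."""
    rng = random.Random(seed)
    x = [rng.uniform(-2.0, 2.0) for _ in range(n)]
    y = [xi ** 3 + rng.gauss(0.0, 1.0) for xi in x]
    return x, y


if __name__ == "__main__":
    x, y = simulate_pair()
    print("score X -> Y:", anm_score(x, y))
    print("score Y -> X:", anm_score(y, x))
```

The direction with the lower score is taken as the more plausible causal direction. A practical method would use a more flexible regressor and a proper independence test with a significance threshold rather than a raw score comparison.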

For the multivariate setting, we have developed a fully differentiable Bayesian structure learning approach based on a latent probabilistic graph representation and efficient variational inference [ ]. Other new results deal with discovery from heterogeneous or nonstationary data [ ], meta-learning [ ], employing generalized score functions [ ], or learning structural equation models in the presence of selection bias [ ], while [ ] introduced a kernel-based statistical test for joint independence, a key component of multivariate additive noise-based causal discovery.

Apart from such 'classical' problems, we have extended the domain of causal inference in new directions: e.g., to assay causal signals in images by inferring whether the presence of an object is the cause or the effect of the presence of another [ ]. In a study connecting causal principles and foundations of physics [ ], we relate asymmetries between cause and effect to those between past and future, deriving a version of the second law of thermodynamics (the thermodynamic 'arrow of time') from the assumption of (algorithmic) independence of causal mechanisms.

Within time series modeling, new causal inference methods reveal aspects of the arrow of time [ ], or allow for principled causal feature selection in the presence of hidden confounding [ ]. We have applied some of these methods to analyse climate systems [ ] and COVID-19 spread [ ]; see also [ ].

Causal Representation Learning

Deep neural networks have achieved impressive success on prediction tasks in a supervised learning setting, provided sufficient labelled data is available. However, current AI systems lack a versatile understanding of the world around us, as shown by their limited ability to transfer and generalize between tasks.

The course focuses on challenges and opportunities at the intersection of deep learning and causal inference, and highlights work that attempts to develop statistical representation learning towards interventional/causal world models. The course will include guest lectures from renowned scientists from both academia and top industrial research labs.

The course covers, among others, the following topics:

- Causal Inference and Causal Structure Learning

- Deep Representation Learning

- Disentangled Representations

- Independent Mechanisms

- World Models and Interactive Learning

Grading

The seminar is graded as pass/fail. To pass the course, participants need to write a summary of at least one lecture (n lectures if the team consists of n members) and write reviews for at least two submissions (2*n reviews if the team consists of n members). Course summaries and reviews have to be submitted through OpenReview (link to be provided). A survey will be sent around to assign participants to particular lectures. The course summary has to follow the NeurIPS format, but with 4 pages of text (references and appendix are limited to an additional 10 pages). Please find the template for the seminar below.

Seminar template: Download

Submission deadline: January 15, 2021.

Time and Place

| Lectures | Tue, 16:00-18:00 | Online |

The Zoom link for the online lectures will be sent by email to registered students at ETH. If you have not done so, please register for the course.

Questions

If you have any questions, please use the Piazza group: piazza.com/ethz.ch/

Please submit your lecture notes here: https://openreview.net/group?

Lecture notes: Download (Disclaimer: These summaries were written by students and have not been reviewed or proofread by the lecturers).

Syllabus

| Day | Lecture Topics | Lecture Slides | Recommended Reading | Background Material |
| --- | --- | --- | --- | --- |
| Sep 15 | Introduction | Lecture 1 | Elements of Causal Inference | Pearl, Judea, and Dana Mackenzie. The book of why: the new science of cause and effect. Basic Books, 2018. |
| Sep 22 | | Lecture 2 | Elements of Causal Inference | |
| Sep 29 | | Lecture 3 | Elements of Causal Inference | |
| Oct 6 | | Lecture 4 | Guest: Sebastian Weichwald | |
| Oct 13 | | Lecture 5 | Guest: Francesco Locatello | |
| Oct 20 | | Lecture 6 | Opening AI Center - no lecture | |
| Oct 27 | | Lecture 7 | Guest: Ilya Tolstikhin | |
| Nov 3 | | Lecture 8 | Guest: Irina Higgins | |
| Nov 10 | | Lecture 10 | Guest: Patrick Schwab | |
| Nov 17 | | Lecture 11 | Guest: Ferenc Huszár | |
| Nov 24 | | Lecture 12 | Guest: Patrick Schwab | |
| Dec 1 | | Lecture 13 | Guest: Anirudh Goyal | |
| Dec 20 | | Lecture 14 | Guest: Silvia Chiappa | |

Primary References

B. Schölkopf. "Causality for machine learning." arXiv preprint arXiv:1911.10500 (2019).

J. Peters, D. Janzing, and B. Schölkopf. Elements of causal inference. The MIT Press, 2017.

Additional References

J. Pearl. Causality. Cambridge University Press, 2009.

M. A. Hernán and J. M. Robins. Causal Inference: What If. Boca Raton: Chapman & Hall/CRC, 2020.