Wittawat Jitkrittum

Alumni

Note: Wittawat Jitkrittum has transitioned from the institute (alumni). Explore further information here

I am now a research scientist at Google Research. Please see wittawat.com for the most up-to-date information.

I was a postdoctoral researcher with Bernhard Schölkopf from 2018 to 2020. My research topics include kernel methods, nonparametric hypothesis testing, approximate Bayesian inference, and generative models. I am particularly interested in the problem of comparing two probability distributions on the basis of their samples (i.e., two-sample testing). A computationally efficient distance measure between two distributions has many practical applications beyond comparing distributions. For instance, such a distance can be used to construct a dependence measure between two random vectors. A dependence measure in turn enables the development of algorithms for clustering, feature selection, and dimensionality reduction, to name a few. See Jitkrittum et al., 2016 (NeurIPS), Jitkrittum et al., 2017 (ICML), and Jitkrittum et al., 2017 (NeurIPS, best paper).
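
As a rough illustration of two-sample testing with a kernel-based distance, the sketch below estimates the squared maximum mean discrepancy (MMD) between two samples and calibrates it with a permutation test. It is a generic textbook-style construction, not the specific tests proposed in the papers cited above. The function names (`gram_matrix`, `mmd2_biased`, `permutation_test`), the Gaussian kernel, and the fixed bandwidth are all assumptions made for this example.

```python
import math
import random


def gram_matrix(points, bandwidth):
    """Gaussian-kernel Gram matrix; each point is a tuple of floats."""
    n = len(points)
    K = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i, n):
            sq_dist = sum((a - b) ** 2 for a, b in zip(points[i], points[j]))
            K[i][j] = K[j][i] = math.exp(-sq_dist / (2.0 * bandwidth ** 2))
    return K


def mmd2_biased(K, idx_x, idx_y):
    """Biased (V-statistic) estimate of squared MMD from a pooled Gram matrix."""
    def block_mean(rows, cols):
        total = sum(K[i][j] for i in rows for j in cols)
        return total / (len(rows) * len(cols))

    return (block_mean(idx_x, idx_x)
            + block_mean(idx_y, idx_y)
            - 2.0 * block_mean(idx_x, idx_y))


def permutation_test(xs, ys, bandwidth=1.0, n_perm=200, seed=0):
    """Return (observed statistic, permutation p-value) for H0: P = Q."""
    rng = random.Random(seed)
    pooled = list(xs) + list(ys)
    n = len(xs)
    K = gram_matrix(pooled, bandwidth)  # computed once, reused per permutation
    indices = list(range(len(pooled)))
    observed = mmd2_biased(K, indices[:n], indices[n:])

    exceed = 0
    for _ in range(n_perm):
        rng.shuffle(indices)  # random relabelling of the pooled sample
        if mmd2_biased(K, indices[:n], indices[n:]) >= observed:
            exceed += 1
    # Add-one correction keeps the p-value strictly positive.
    p_value = (exceed + 1) / (n_perm + 1)
    return observed, p_value
```

Calling `permutation_test(xs, ys)` with two lists of equal-dimensional tuples returns the statistic and a p-value; a small p-value is evidence that the two samples come from different distributions. In practice the bandwidth matters a great deal and is usually chosen from the data rather than fixed at 1.0 as assumed here.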

More recently, I have been working on a new method for comparing the relative goodness of fit of two models. Given two models (two generative adversarial networks, for instance) and a reference sample, the goal is to determine which of the two models fits the sample better. This problem can be formulated as a hypothesis test. See the paper Jitkrittum et al., 2018 (NeurIPS). Several further extensions of this setting exist. Preliminary work on comparing two latent variable models is available here. When there are more than two candidate models, there is no unique way to address this setting. We studied two approaches in our recent paper (to appear at NeurIPS 2019): (1) based on post-selection inference, and (2) based on multiple correction.

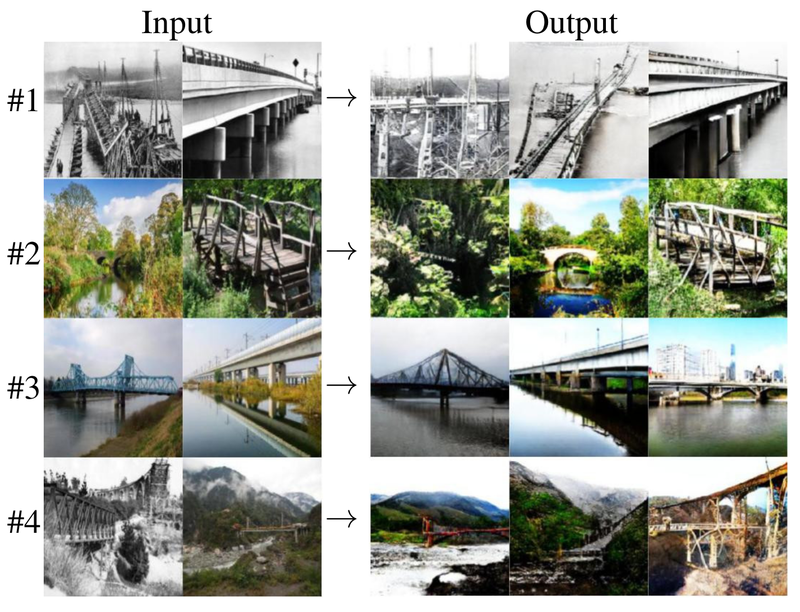

In the direction of generative modelling, a problem that has not received much attention is the task of generating images that are similar to an input set of images, a task we call content-addressable image generation. A key challenge here is that the input is a set of arbitrary size, and that there is no order over the input images. In our ICML 2019 paper, we proposed a procedure (based on kernel mean matching) that allows a pre-trained generative model to perform content-addressable generation without retraining.

I release the source code for most of my projects. See my GitHub page.

kernel methods, hypothesis testing, generative models, model comparison